Motivation

Compilers make use of many different algorithms for numerous tasks in the different phases of the compiler. They come from different algorithmic areas, like graph problems, or searching, optimization etc.

In the lecture, we have encountered some problems (and actually there might be more later), where we said:

A worklist algorithm would be a good idea to solve it!

Sometimes I sketch a worklist algorithm, and sometimes I just mention such an algorithm, and use instead a solution that does not bother with a worklist formulation.

But I never nail down what worklist algorithms or worklists actually are. Since, as said, worklist algorithms show up at different places in the lecture and show up in more places inside or outside a compiler, this post tries to shed some light on the concept.

For concreteness sake, I won’t make a general discussion of principles of worklist algorithms as such, nor try a panoramic overview over different applications. Instead, we discuss it mainly in the context of the calculation of the first-sets in the chapter about parsing. So the text is best read together with the material about the first-sets and I won’t repeat here the definition of that concept and what role it plays in parsing.

First-set of a non-terminal (simple version and no worklist)

We take, for the discussion, the simplified version of the algo, namely the one that does not have to deal with so-called epsilon-transitions. Those add an extra layer of complication as far as the first-sets are concerned, though the principle of the worklist algorithm is the same. So we take the simpler one for illustration.

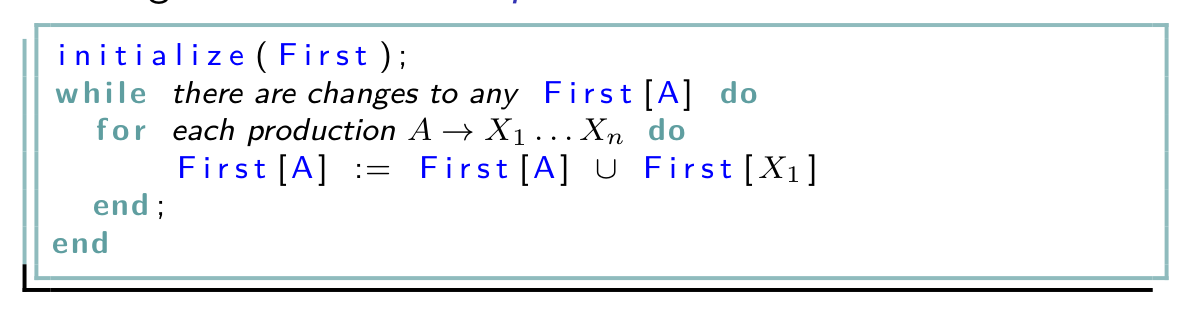

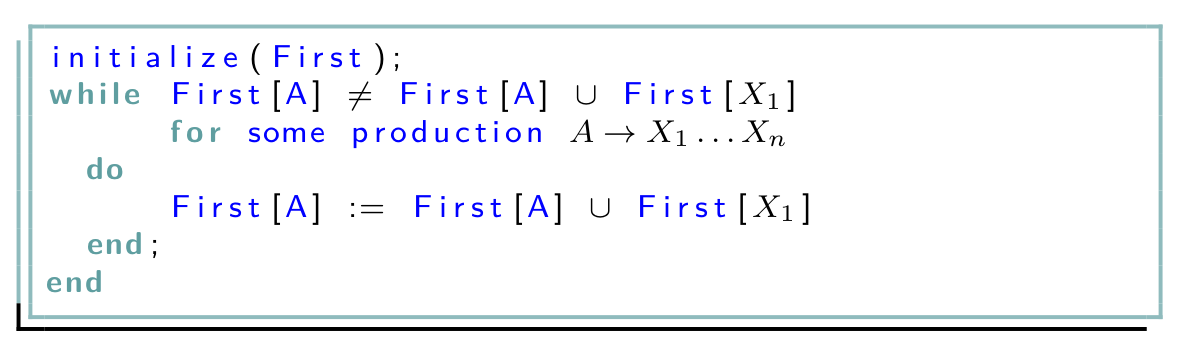

Here is the pseudo-code of the non-worklist algo for the simplified version of the first-set calculation:

This formulation of the algo makes no effort to focus where work needs to be done, thereby wasting time. The “work” that needs to be done are the steps in the body of the loops, here simply updating the information about the first-sets in the corresponding array, increasing that information until saturation.

Run of the algo on a simple example

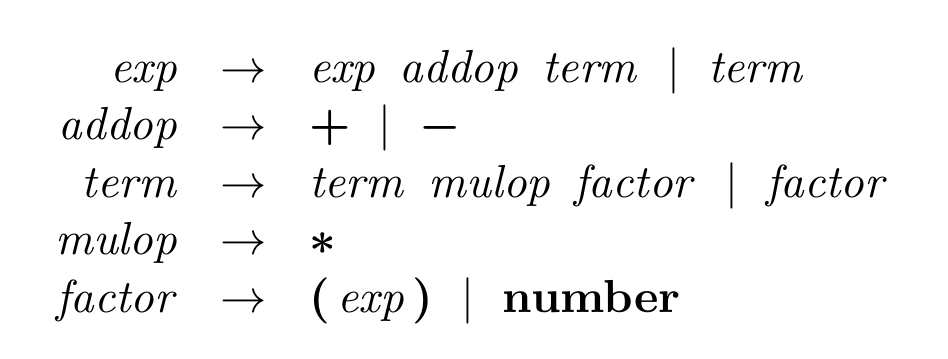

The algorithm is illustrated in the lecture by a standard example, a grammar for arithmetic expressions:

The following table illustrate the run of the algorithm, going through different passes (the picture from the lecture is taken from K. Louden’s book ``Compiler Construction: Principles and Practice’’):

The three passes shown in the table correspond to three iterations of the outer while-loop. Actually, the algo goes through the outer loop 4 times, so there is a 4th pass. In that last round, the algo detects that nothing more changes compared to pass 3, so after the 4th round, the algo terminates.

In the table, many slots are empty. That are the cases where nothing changes, i.e., where the corresponding production is treated, but updating the array does not actually increase the information in the corresponding slot, it leaves it unchanged. So that’s wasted work.

How do improve that?

The worklist algo (or worklists algos in general) improves that, avoid unnecessary work, and additional and in connection with that, to organize the work rationally.

In this example, the work to avoid is the single line in the body of the inner loop. If one could do the update only in those case where actually something changes, corresponding to the non-empty slots of the table, that could be an improvement.

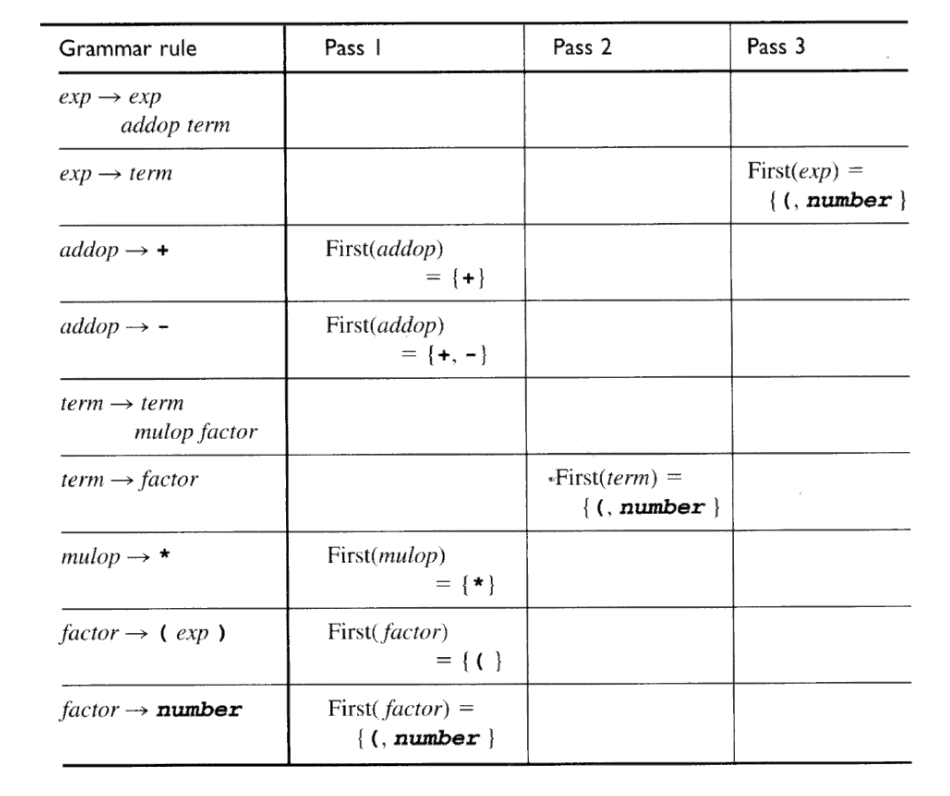

One could reformulate the algo as follows:

So, this version calculates an overview over those places which really require an update. For each pass, it stores those in a worklist, works it off to do the required work. This way, it skips over the useless updates as they are not contained in that list. Never mind, that this time a repeat-until is used instead of while-loop, that’s irrelevant for the discussion here.

What’s wrong with that?

Skipping work sounds tempting, but to call the code an improvement makes basically no sense… The worklist, constructed at the end of the loop-body, calculates exactly the places where work needs to be done, if any, in the next pass. It does so by checking if First(A) $\union$ First(X_1) is different from First(A). But if there is a difference, the major part of the work has been done already, namely doing the union operation (and additionally a comparison on top). So checking first all productions or slots, that require actual work, i.e., where a real update would happen if one would do it, and then focusing on those where that is the case does not really bring anything. So one replaced the routine repeated update of all productions with a (repeated) check of all productions to find out precisely which one to use for an update and which not, and that does not save anything. Unless it’s the act of updating itself which dominates the costs, but that is implausible.

Doing away with passes and with the worklist

The original version as well as the previous one works in passes or rounds. If, in each pass, the task are tackled in the same order, one could call it a round-robin strategy. Both versions do that, the first does the update indiscriminately for all productions, the second one focuses on each round on exactly the productions where the first-set information needs an update, and employs a worklist to manage that.

The following version does it slightly differently: it checks if a production leads to an update, and if so, does the required update immediately. In that case, no data structure is needed that remembers for a while that some work needs to be done later. So, the WL data structure is unnecessary.

The algo picks an arbitrary production where work needs to be done is picked and treated. Consequently there is no guarantee that, once a production P is treated, first all others are checked to be treated or skipped, before it’s P’s turn again. In other words, this version is no longer round- or pass-based. Due to the randomness of which production to treat next, such a formulation is also called chaotic iteration.

So that algo checks where work needs to be one, and never does unnecessary update. Since the necessary work is done immediately, there’s no need to store it in a WL data structure, so one would not call it a worklist algorithm.

Actually, the chaotic iteration has the same problem than the round-based version before, which used a WL: doing the checks to avoid work is not worth it.

There is another silliness in the code, namely the fact that the union of the two first-sets is calculate calculated two times, one time for the check, the second time for the update itself. Actually, it’s worse. The algo has to find a production that needs treatment, and that may mean, it may search for one, repeatedly checking productions that turn out to need no treatment before finding one that does. This means, the attempt to focus on exactly the productions that actually need treatment to avoid all the places where it’s unnecessary multiplies the effort (at least in the chaotic iteration version).

For fairness sake: the random algo called chaotic iteration is not meant as template for a realistic solution. It is more an extreme case of approaching the problem. Note that being completely chaotic means, that, by chance, it could in some run behave like, for example, the round-based one. Since one can prove (under some assumptions we won’t discuss here) that chaotic iteration terminates with the correct result, one has, at the same time, established that all other specific strategies, like the round-based one, also work correctly.

Before finally addressing the real worklist solution, let’s summarize the insights so far. We have seen first a solution that treats productions unconditionally, without checking whether it’s needed or not. On the other side of the spectrum is a solution that never does unnecessary work, but checking whether work is needed or not did not improve things.

Needed is a way to

skip pieces of useless work (productions, slots in the table,… ) without searching for work and without checking whether it’s useless or not!

Actually, the round-based version using WL data-structure would also not be called worklist algorithm, because it does not make use of this core aspect of worklists.

Approximation of the work load and managing dependencies

To achieve that, the core trick is to approximate the work to be done. So the work list does not contain exactly the tasks that need to be done, but those that potentially require work. The only thing one is sure is: if some task is not in the worklist, it can be safely skipped.

So the worklist over-approximates a precise version of a worklist, which would be too costly to maintain. Besides that, it also avoid searching for work to be done, which is a bad thing to do, as we discussed in connection with the chaotic iteration.

Before we say, how to achieve that, we should clarify the following: when we said, a task is not in the worklist, in our case of the first-set, a production, and thereby can be safely skipped, then that is just a snapshot of the current situation. The worklist is ``worked-off’’ by the algorithm, i.e., the algorithm picks a piece of work from the worklist, which may be real work, leading to an update, or not. Then the work, i.e., the update is executed (and the piece of work removed from the work-list, as being done). However, the worklist does not only get shorter, it will typically also get larger. That means, some production where it’s clear it needs no treatment now (being not currently listed in the worklist), may be entered into the worklist later as suspicious of potentially in need of treatment. Indeed a production (or piece if work) may removed and be re-entered to the worklist many times. That is characteristic of worklist algos. An algorithm that uses a list and then trickles down the list from head to tail, treating one element after the other, is not called worklist algorithm, even if it does work and it uses a list. But it’s too trivial to deserve the name…

We have mentioned that treating a piece of works removes it from the worklist, as being done for now. We have still to explain how a piece of work is (re-)entered into the worklist. That has to do with the fact that some pieces of work depend on others. In our setting If we update the information on one non-terminal may make it necessary to (re)-consider other non-terminals, resp. productions for them, and thus (re-)add them to the worklist; again without checking if the re-added production means real work, or not. But because of that dependency, they are suspicious of potentially requiring work and thus added to the worklist.

If we look again at the table with the passes 1,2,3 for the expression grammar example, we see also that some productions, corresponding to lines in the table, in some pass require work, sometimes not, though the example is so simple that no production is targeted twice.

The dependencies form a graph. For instance in the expression example, the expressions depend on terms, terms depend on factors, and factors in turn depend on expressions. More precisely, the first-set of expressions depends on the first-set of the term non-terminal, the first-set of the term non-terminal depends on the corresponding information for factors, and finally there is the dependency of the first-set information of the expressions on that for factors.

There is no direct dependence of the information for factors on that for expressions (only an indirect dependence, and a dependence in the opposite direction). In the table, the information about factors, which is added in the first pass, reaches the terms in the second pass, but not the expressions yet, as there is no direct dependence and it takes a 3rd pass to propagate to expressions, and a 4th pass to find out, that all is stable by now.

A worklist algorithm would treat (productions for) terms as being directly dependent on factors, but expressions not. Thus updating information for factor would add term-productions to the work list, but not expressions. They may or may not be added depending on whether the term productions lead to an update to term or not (on this particular example, there will be at least one situation, where terms are updated, and thus expressions will be treated, as well, of course).

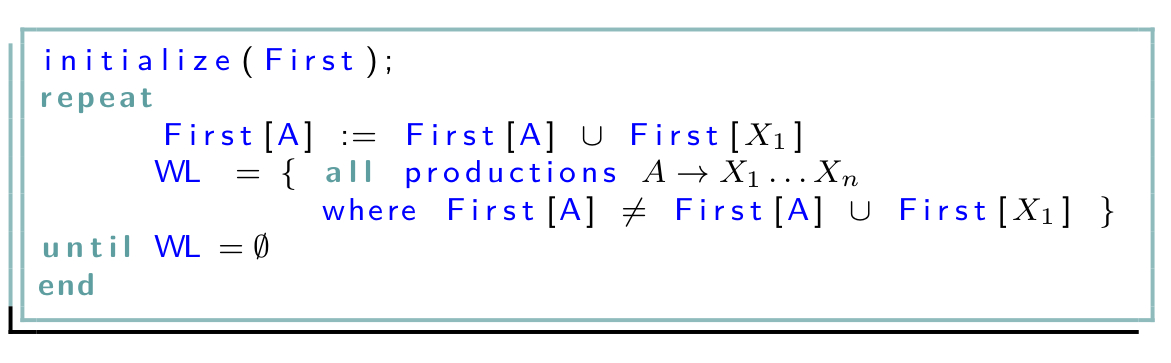

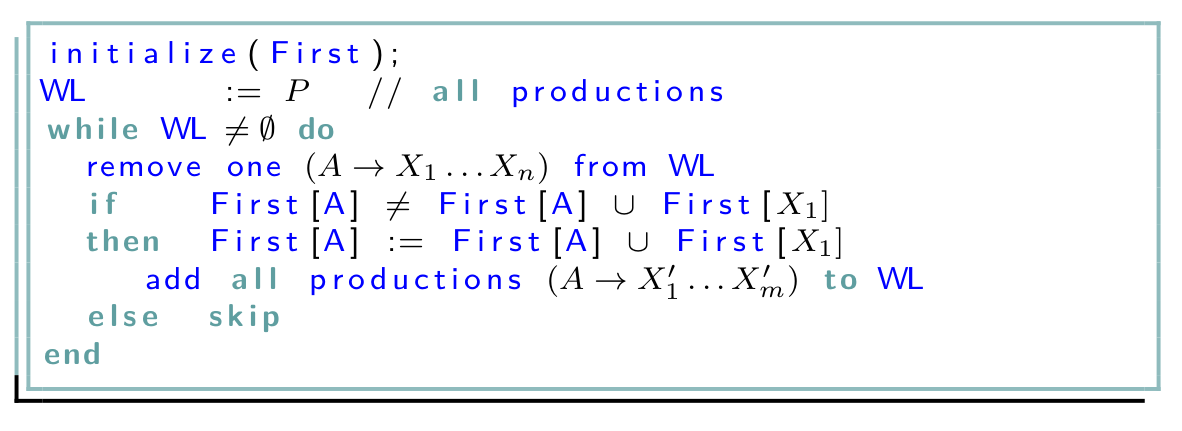

Finally the code

Without further ado, here some pseudo-code based on work-lists for the first-sets (without epsilon-transitions).

We said, one core trick is to avoid checking. As the code shows, of course there is still checking whether work needs to be done, but the real purpose of that check is not to avoid the corresponding update when not needed (though that is a consequence of the check), the real purpose is not to (re-)add some pieces of work to the work-list that potentially need update, because they depend on the current production. That is the purpose of the check. Note also, that this also avoids searching for work, at least to some extent. Since the work list contains places where work may or may not be required, working off the worklist may remove productions that are ok currently, before one finds one that needs treatment.

Of course, especially in the light of the discussions above, the code is slightly silly, calculating the union of the two sets with the current first set information two times, first for the check, and then again for the update. But that’s easy to avoid, storing the result in a variable.

Note in passing: in the semantic phase or static analysis phase, there are techniques that allow a compiler to detect situations like that, where the user is silly enough to evaluate a complex expression more than once, like in the previous code. The compiler would then use that information and transform the program, in the way sketched: calculate the result once, store it, and later reuse it (if that is that improvement).

In this particular piece of code, the reuse-the-result situation is pretty obvious: the expression is reused immediately after the first use. Sometimes it’s more complex, and one has to take also check if it’s a real re-use situation. Just because the same expression occur twice, one has to make sure that one really comes before the other (in the presence of loops and branches) and also that the result is still the same. The expression may contain variables, and those may change, at least in an imperative programming language. So, it’s a non-trivial analysis problem; it’s a data flow problem, which is an important class of program analysis. In this lecture, we will not dig deep into many different data-flow problems (there are very many), in particular we won’t elaborate on the one sketched here; that one is actually called available expression analysis. But we will discuss liveness analysis, which is another important data-flow problem.

As it happens, liveness analysis, available expression, and many data-flow analyses can well be solved by a worklist algorithm…. As mentioned, there are many problems, especially inside a compiler, where worklist algorithms are useful.